New Method Boosts Language Models' Financial Trend Predictions

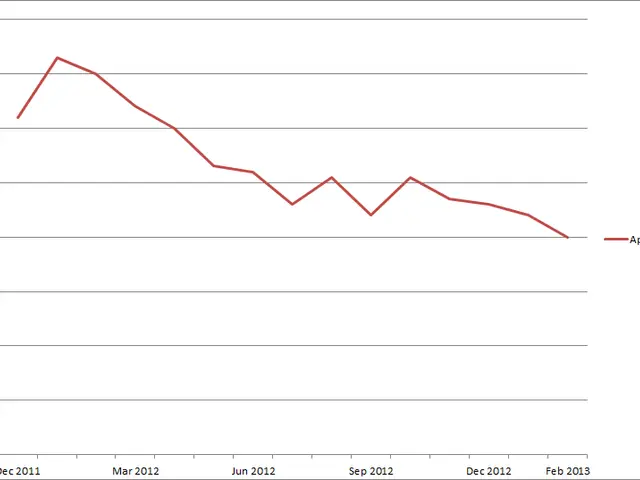

Researchers have compared two language models, DeepSeek-14B and DeepSeek-14B-SFT, on predicting financial trends. The study introduces Reflective Evidence Tuning (RETuning), a method intended to improve model performance on this task.

Stock movement prediction is notoriously challenging for large language models (LLMs). They tend to mimic analyst opinions and struggle to weigh conflicting evidence. The researchers developed RETuning to address both problems.

The method encourages LLMs to build a robust analytical framework and make predictions based on logical reasoning. It serves as an effective 'cold-start' approach, unlocking the reasoning ability of the language model within the financial domain.

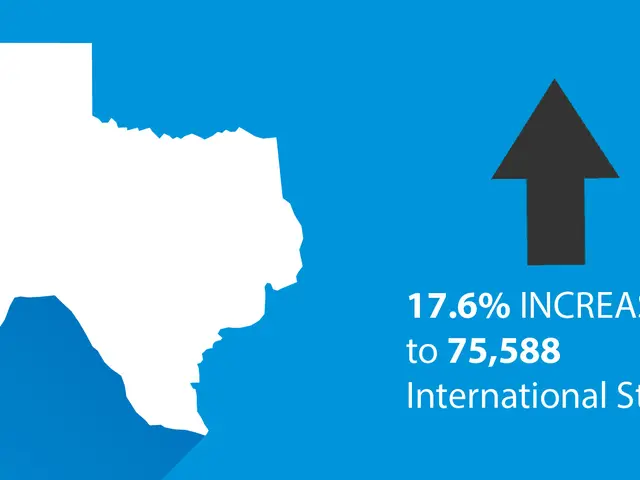

The team created the Fin-2024 dataset, covering all of 2024 for 5,123 stocks, integrating six key information sources. They validated the method on this large-scale dataset, demonstrating robust performance even on out-of-distribution stocks.

Experiments show that RETuning improves prediction accuracy and allows performance to scale with additional inference-time compute. DeepSeek-14B-SFT, using this method, produces more concise and focused predictions that are easier to interpret.
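The article does not say how inference-time scaling is carried out. One common form, shown here purely as an illustration and not as the researchers' procedure, is to sample several predictions for the same stock and take a majority vote. In this minimal sketch, `sample_fn`, `majority_vote`, `predict_with_voting`, and the labels `"up"` and `"down"` are all hypothetical names chosen for the example.

```python
from collections import Counter
from typing import Callable, List


def majority_vote(predictions: List[str]) -> str:
    """Return the label that appears most often in the sampled predictions."""
    if not predictions:
        raise ValueError("need at least one prediction to vote")
    counts = Counter(predictions)
    return counts.most_common(1)[0][0]


def predict_with_voting(sample_fn: Callable[[], str], n_samples: int) -> str:
    """Call a (hypothetical) model sampler n_samples times and vote on the result.

    sample_fn stands in for one stochastic model call that returns a label
    such as "up" or "down"; it is an assumption, not part of the study.
    """
    votes = [sample_fn() for _ in range(n_samples)]
    return majority_vote(votes)


if __name__ == "__main__":
    # Stub sampler that replays fixed answers, in place of a real model call.
    canned = iter(["up", "down", "up", "up", "down"])
    print(predict_with_voting(lambda: next(canned), n_samples=5))
```

Raising `n_samples` spends more compute per prediction in exchange for a more stable answer, which is the general trade-off that inference-time scaling refers to.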

According to the study, RETuning improves the performance of large language models on financial prediction tasks, and validation on the Fin-2024 dataset suggests promise for real-world applications. Further research is expected to build on these findings.
